I am currently a research scientist on the Language Information Access Technology (LIAT) team at RIKEN AIP, under the supervision of Prof. Satoshi Sekine. I am based in Seattle and have extensive knowledge of machine learning, particularly deep learning and generative AI. My work covers not only fine-tuning models but also designing, developing, and publishing novel architectures.

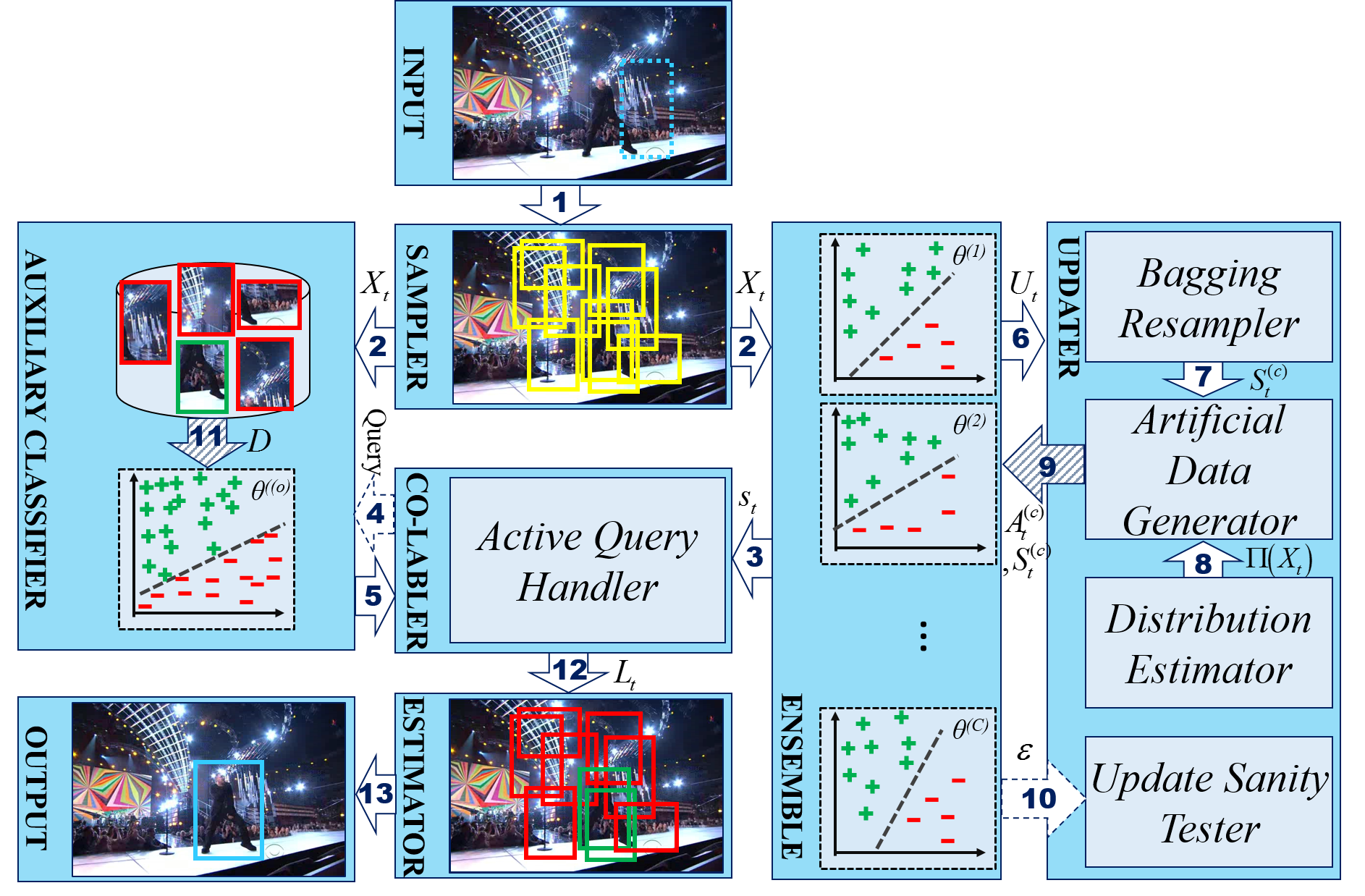

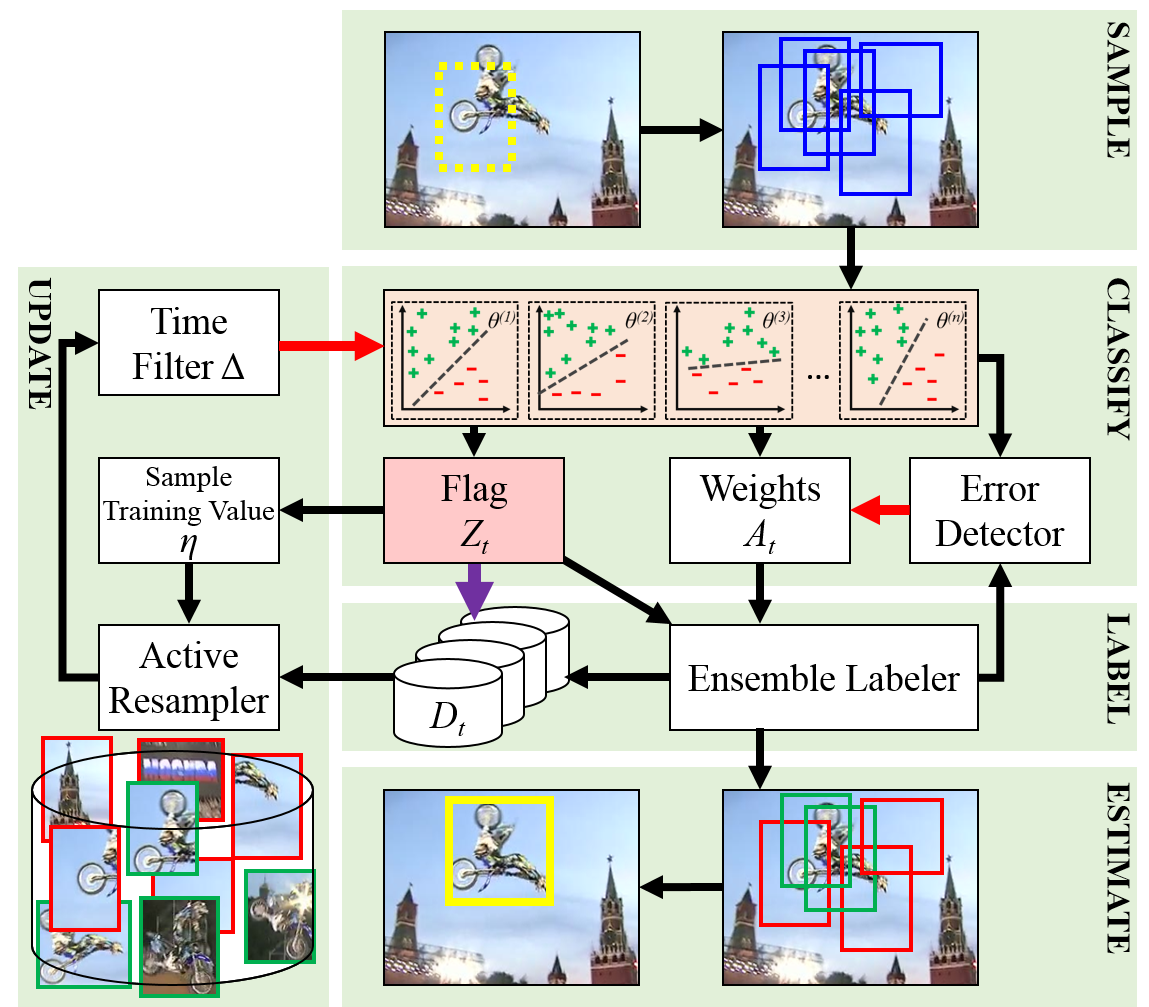

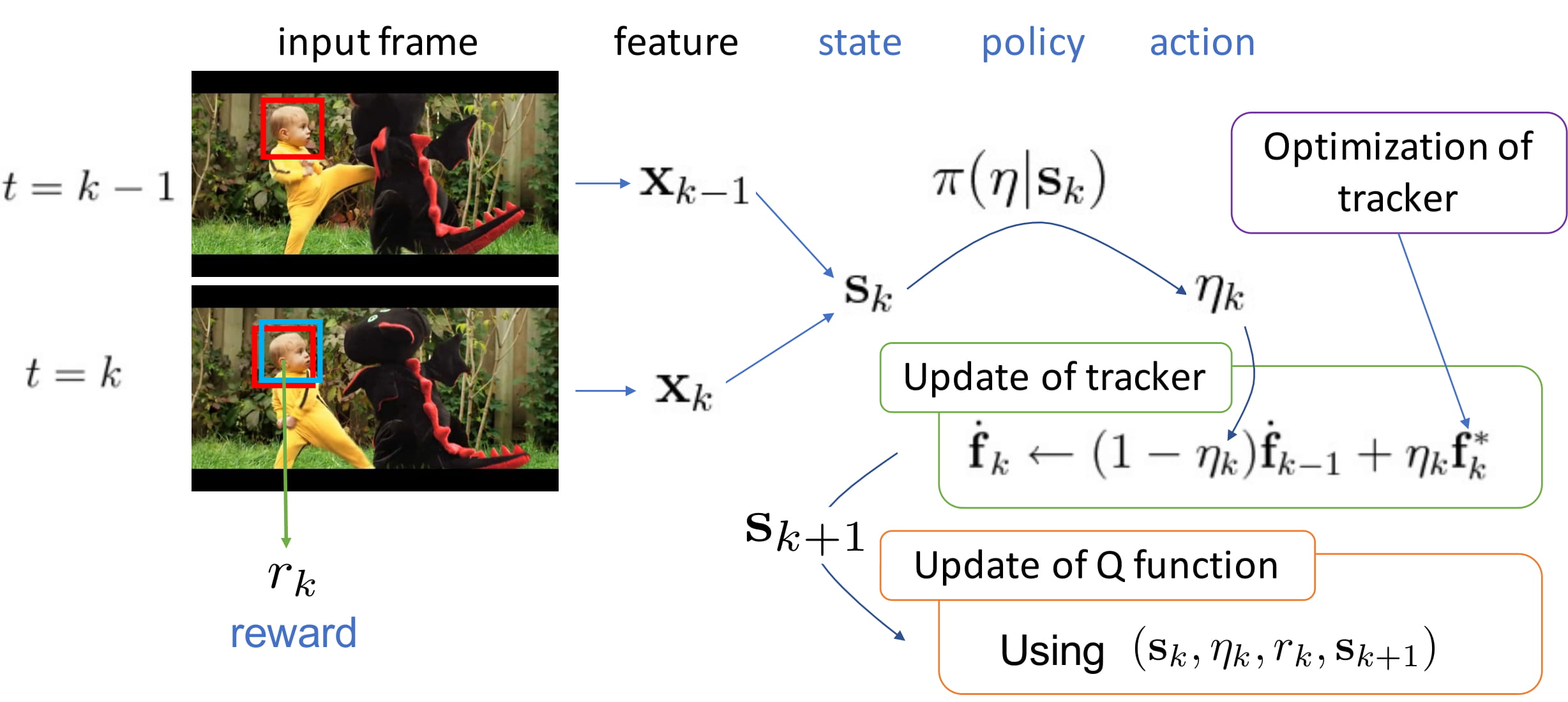

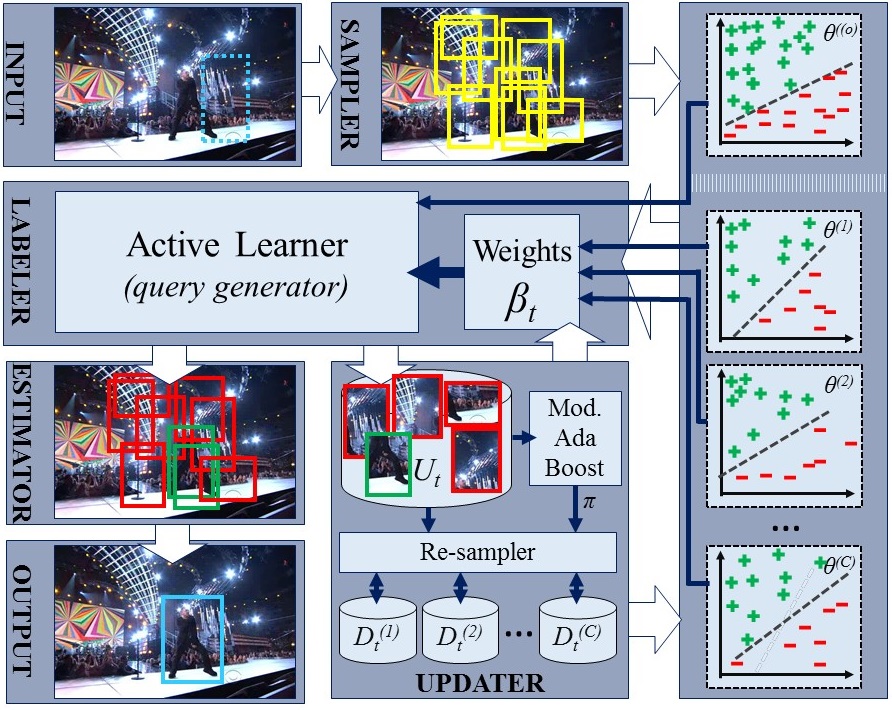

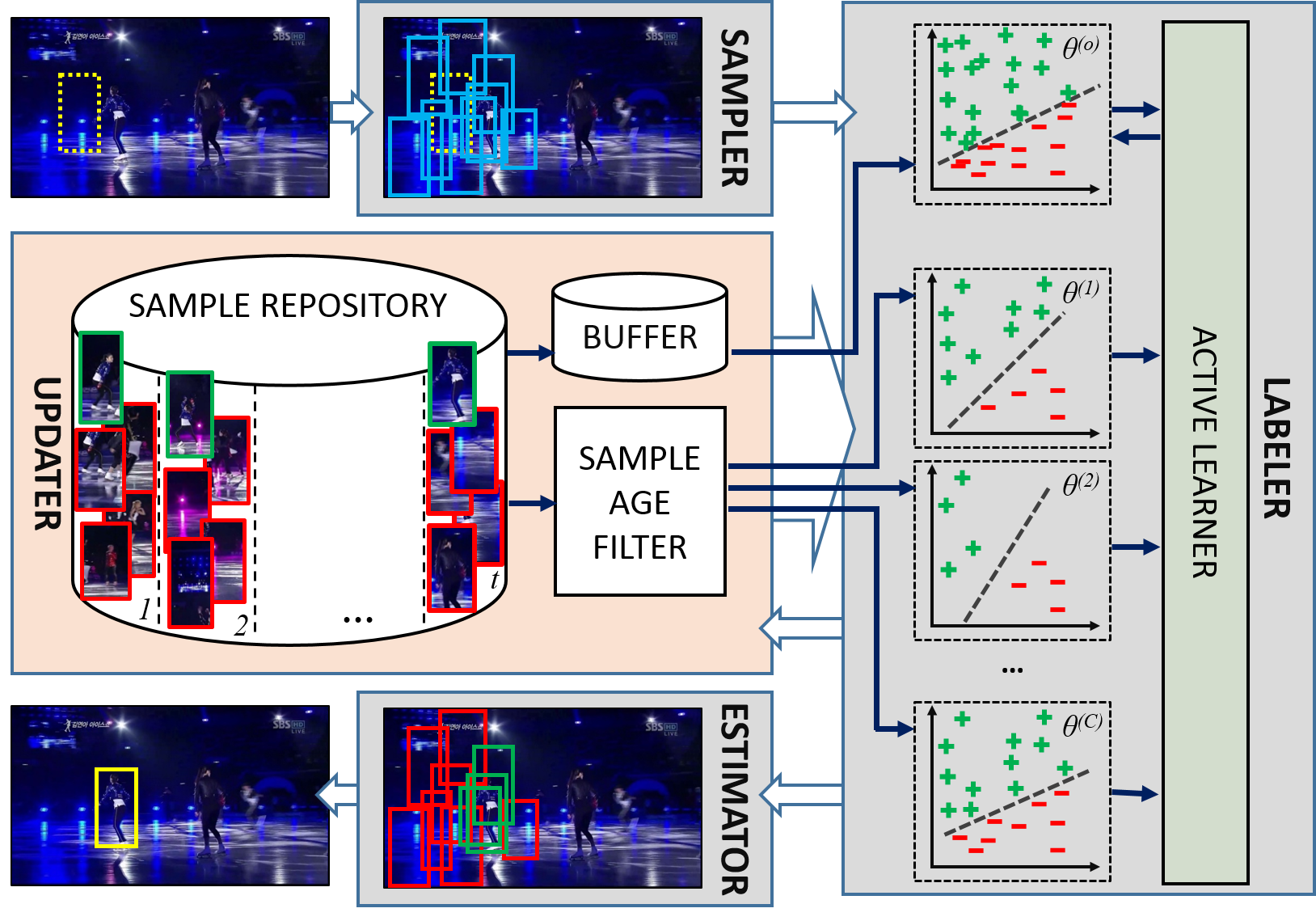

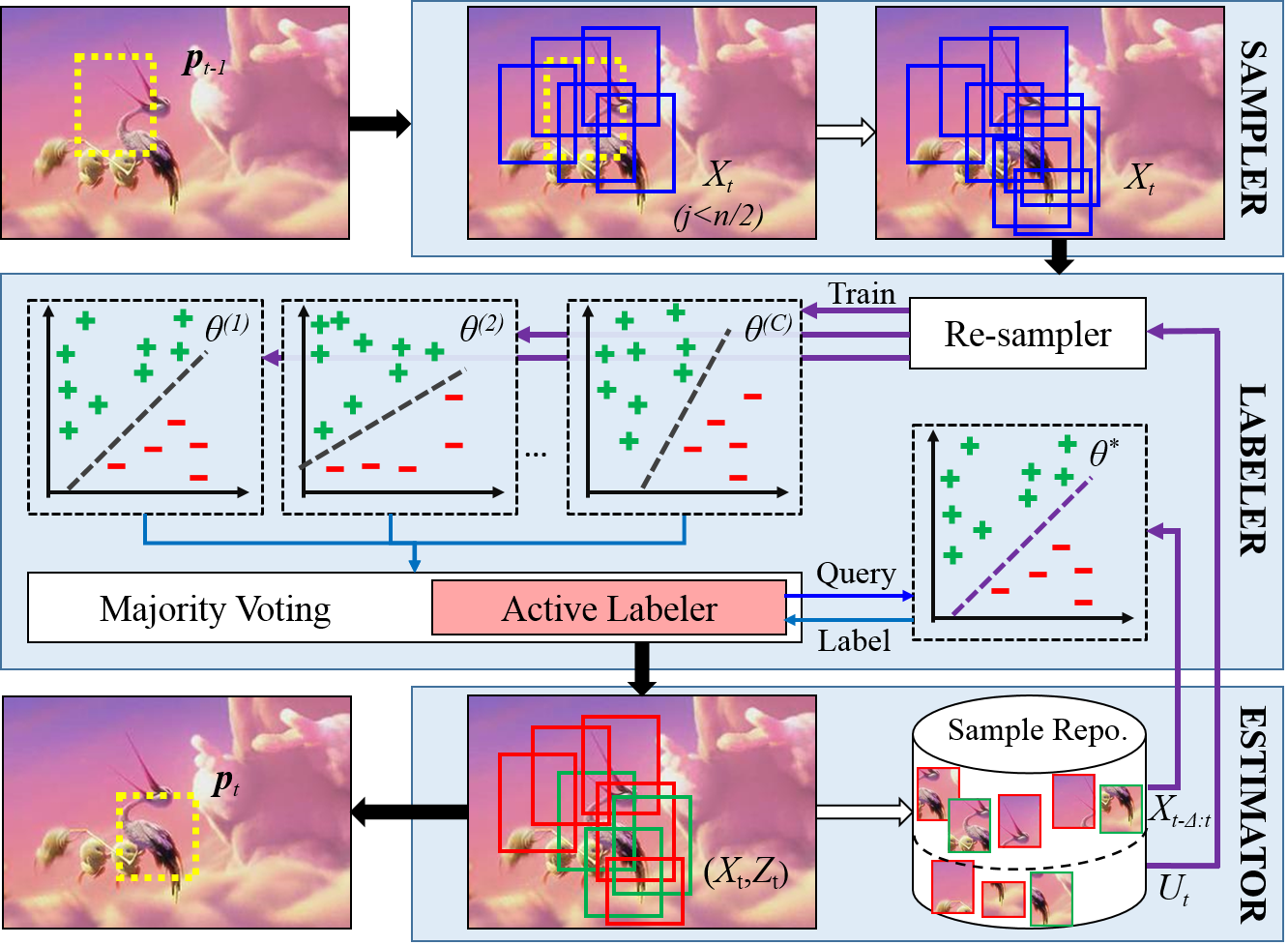

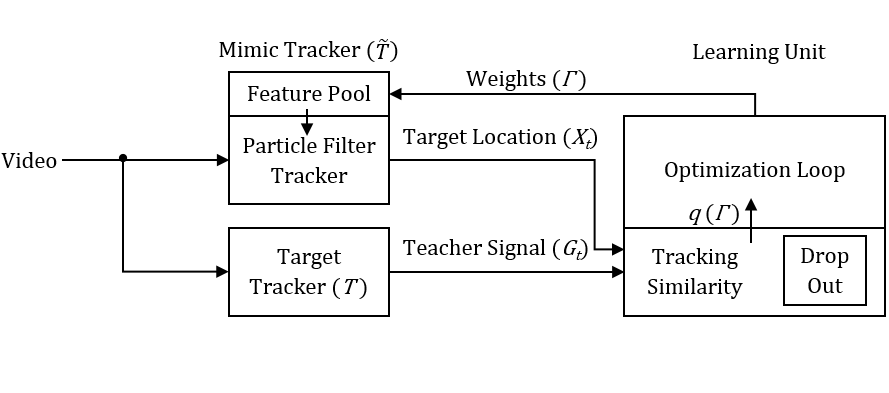

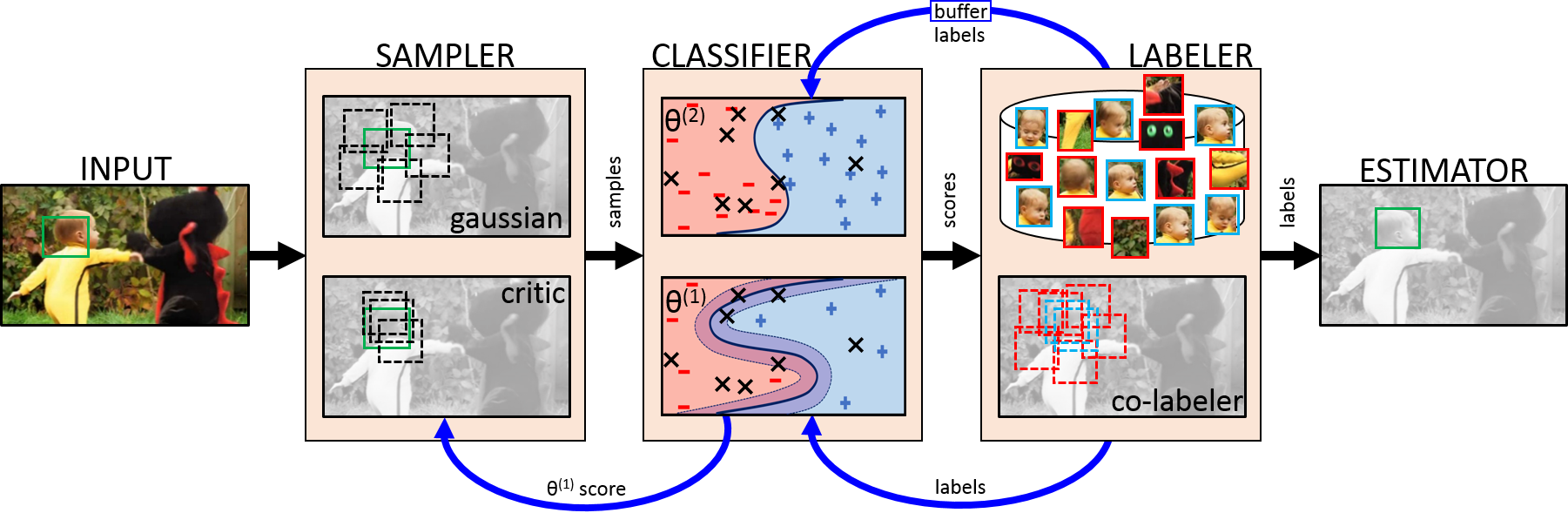

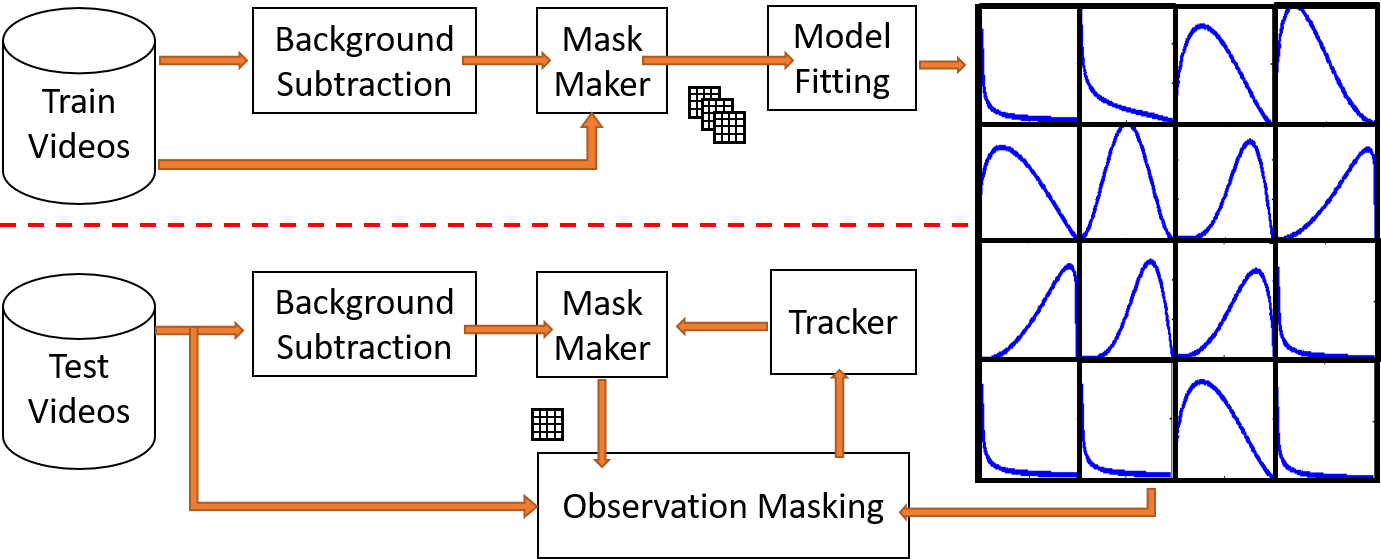

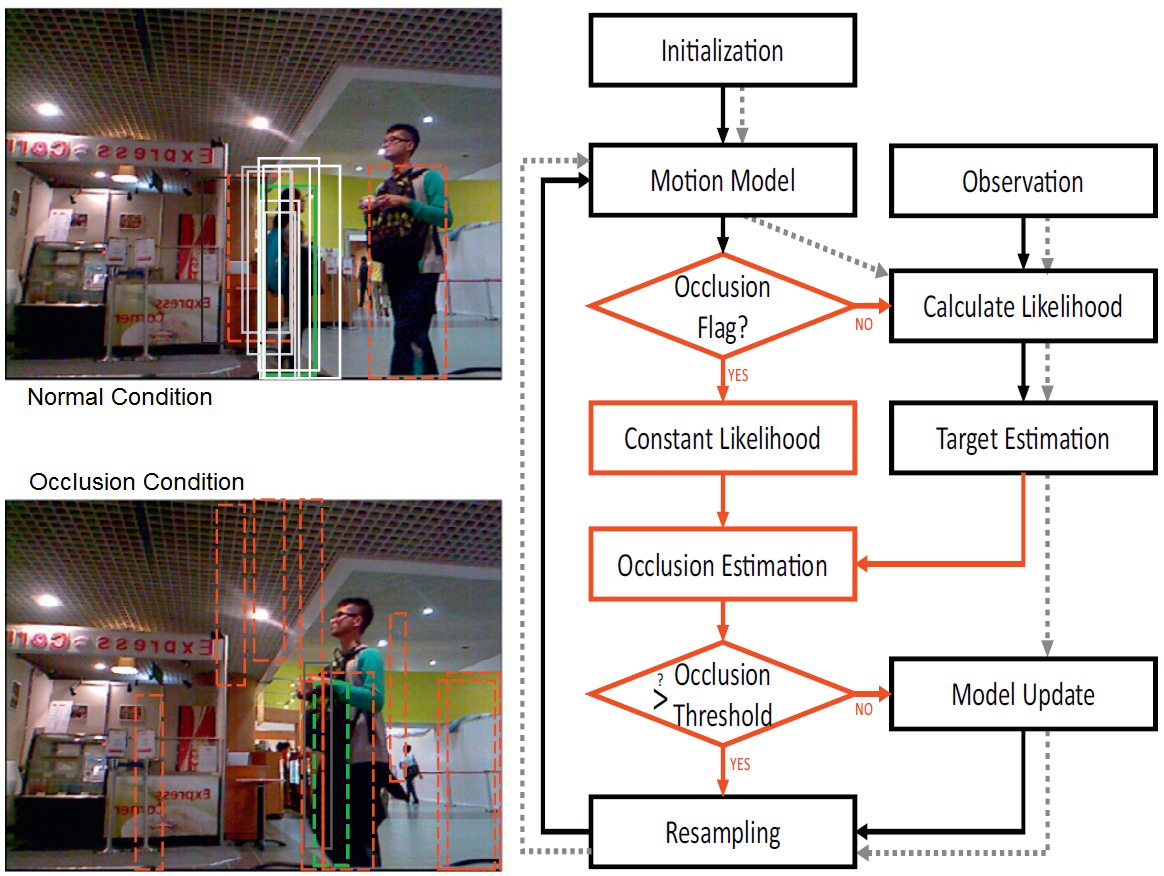

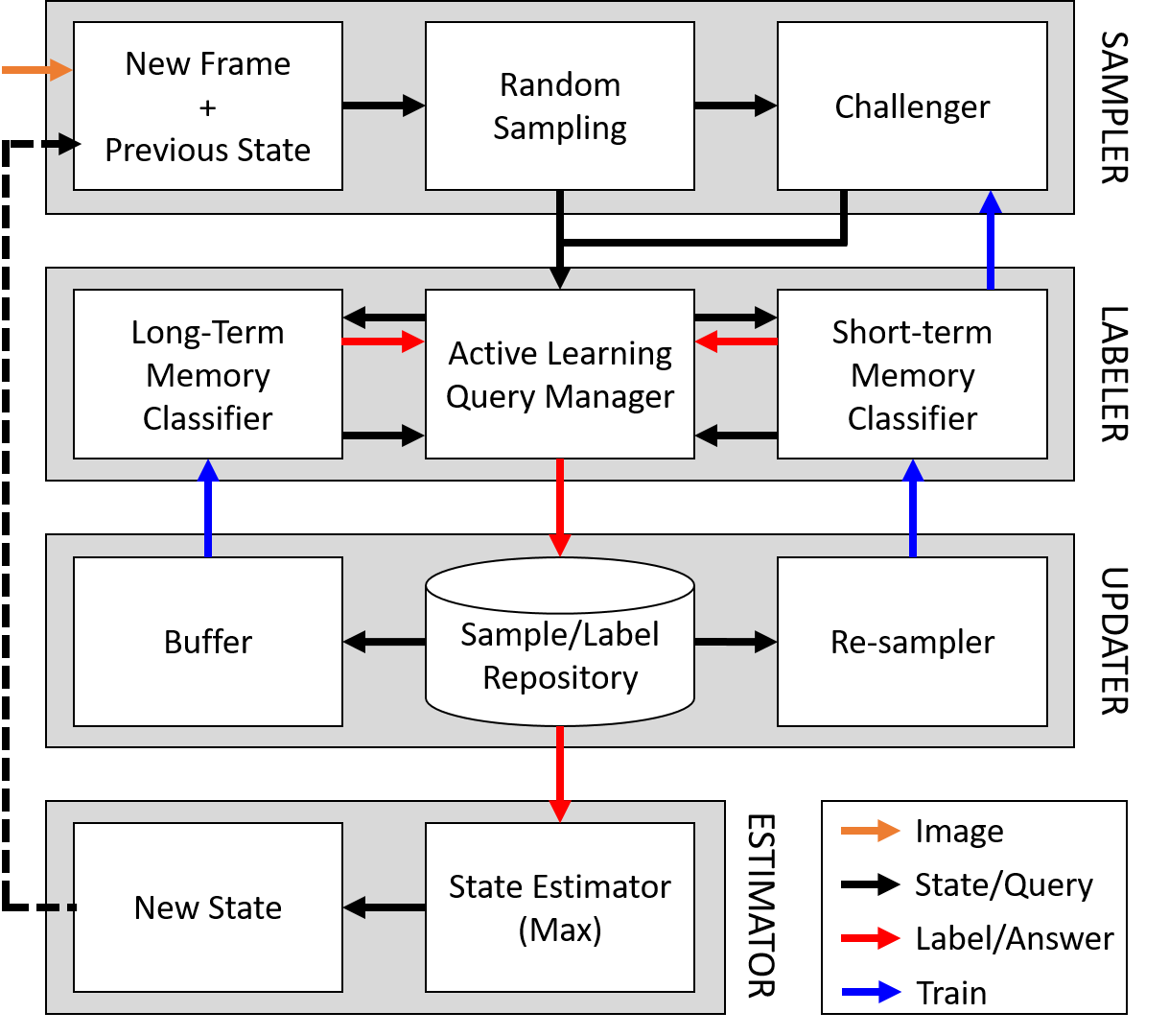

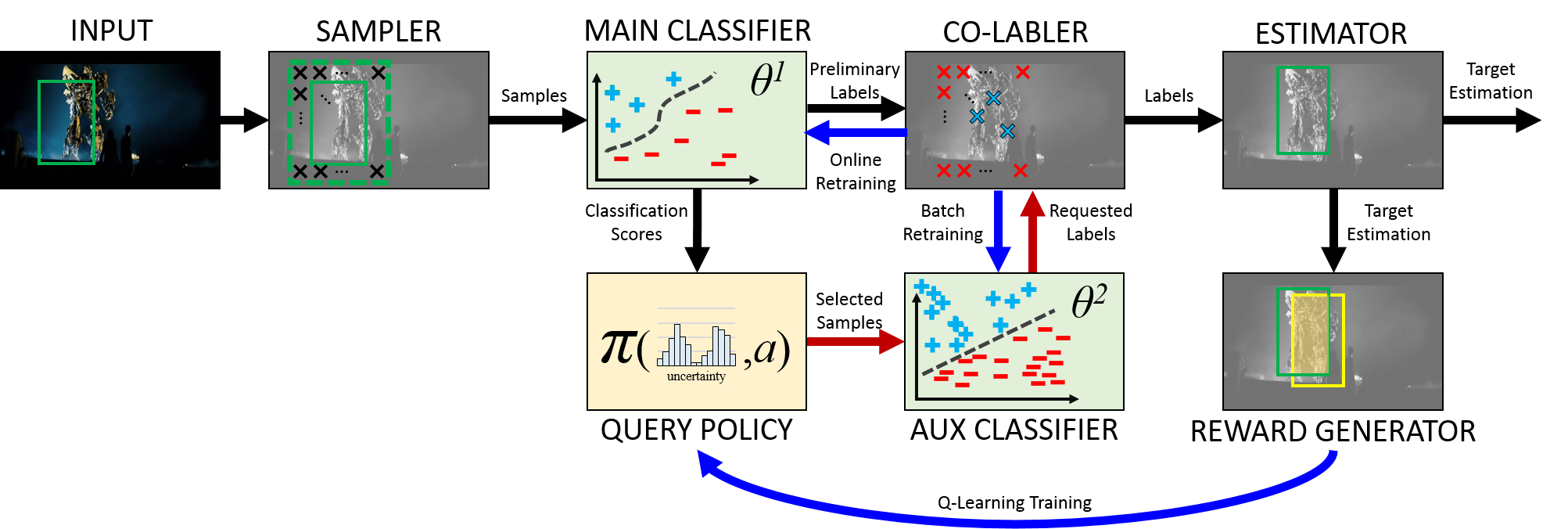

I work on transferring my knowledge from CV to NLP to support knowledge extraction from text, building a bridge between large language models (LLMs) and CV tasks, structuring that knowledge for higher-level inference using multi-task learning, and applying transformers to solve problems quickly and reliably. Before that, I was a postdoctoral fellow at the Graduate School of Informatics at Kyoto University. As a member of the Ishii lab, I worked with Prof. Shin Ishii and Dr. Shigeyuki Oba on the use of active learning in visual tracking and scene understanding. This work was funded by the Strategic Advancement of Multi-Purpose Ultra-Human Robot and Artificial Intelligence Technologies (SAMURAI) project of the New Energy and Industrial Technology Development Organization (NEDO) and by the Post-Kei project. I am also collaborating with the Biochemical Simulation Lab of RIKEN QBiC, RIKEN AIP's Machine Intelligence for Medical Engineering Team, and the Neural Computation Unit of OIST on the Exploratory Challenges of the Post-K project. I also collaborate with the Human-AI Communication team of RIKEN AIP under the supervision of Prof. Toyoaki Nishida.

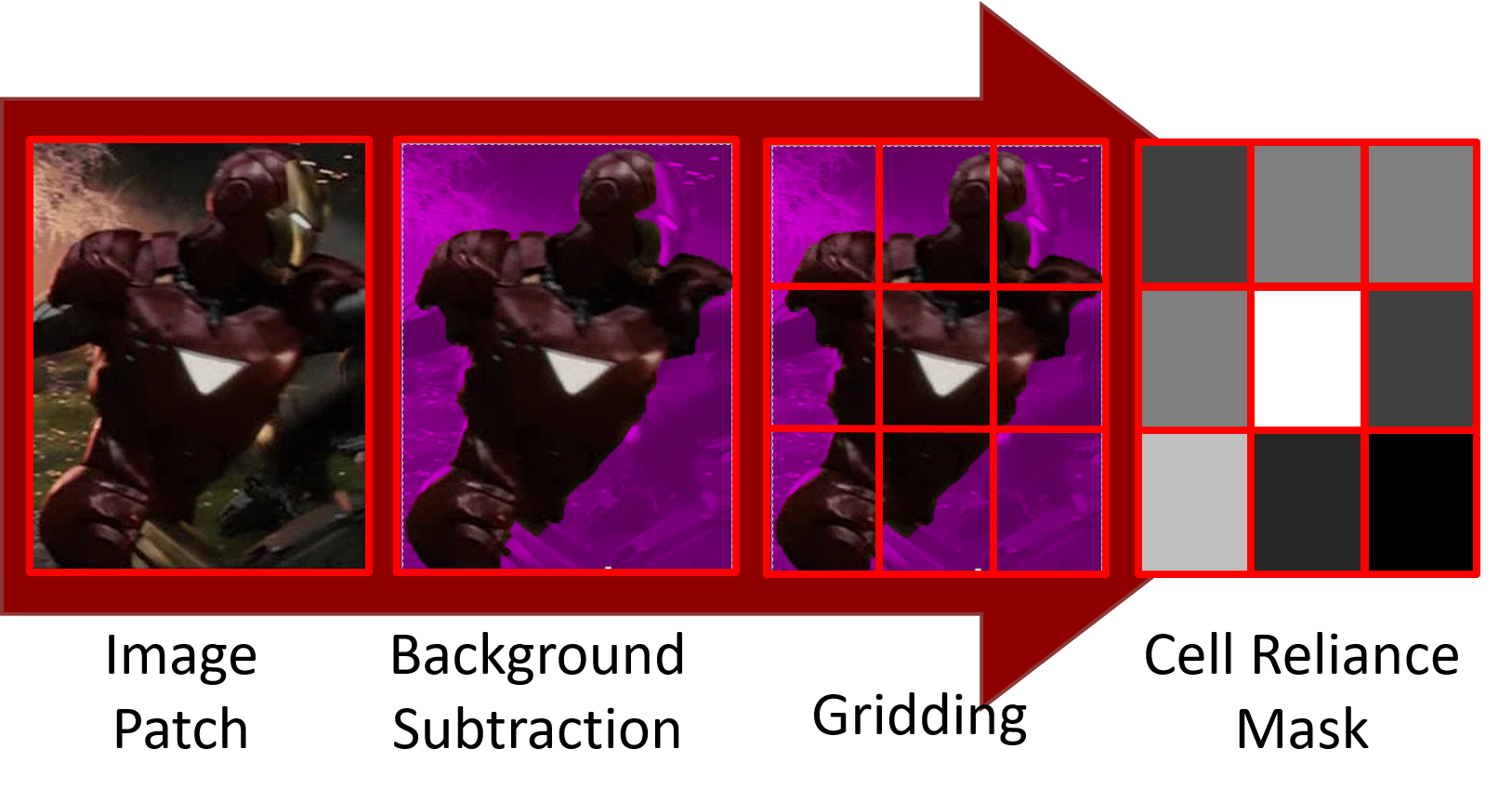

I finished my Ph.D. under the supervision of Prof. Shin Ishii in the same lab, where I worked on occlusion-aware visual tracking, 3D object reconstruction, and imitation learning. In particular, my Ph.D. dissertation addressed particle filter-based tracking under persistent and complex occlusions, proposing a novel occlusion-aware appearance model and a context-aware motion model within this framework.

Bio: Kourosh Meshgi received his B.Sc. in Hardware Engineering (2008) and his M.Sc. in Artificial Intelligence (2010) from Tehran Polytechnic, and his Ph.D. in Informatics (2015) from Kyoto University. He is currently a research scientist at RIKEN AIP (working remotely from Seattle, WA) and a guest researcher at Kyoto University. His research interests include machine learning, natural language processing, computer vision, robotics, and computational linguistics. Dr. Meshgi's current research focuses on machine learning, generative AI, and designing, training, deploying, fine-tuning, interpreting, explaining, and orchestrating deep learning models for NLP and CV tasks.